Hi, I am Hongduan Tian, a third-year Ph.D. student in the Trustworthy Machine Learning and Reasoning (TMLR) Group in the Department of Computer Science, Hong Kong Baptist University, advised by Dr. Bo Han and Dr. Feng Liu. Before that, I received my master's degree from Nanjing University of Information Science and Technology (NUIST), where I was fortunate to be supervised by Prof. Xiao-Tong Yuan and Prof. Qingshan Liu.

Previously, my research focused mainly on few-shot/meta learning and cross-domain generalization. Currently, my research interests focus on trustworthy and efficient post-training, fine-tuning, and inference of foundation models and foundation model agents. I am also interested in the learning dynamics of foundation models (see topics of Yi Ren) and in model knowledge transfer (such as model merging).

Please feel free to email me for research, collaborations, or a casual chat.

📣 News

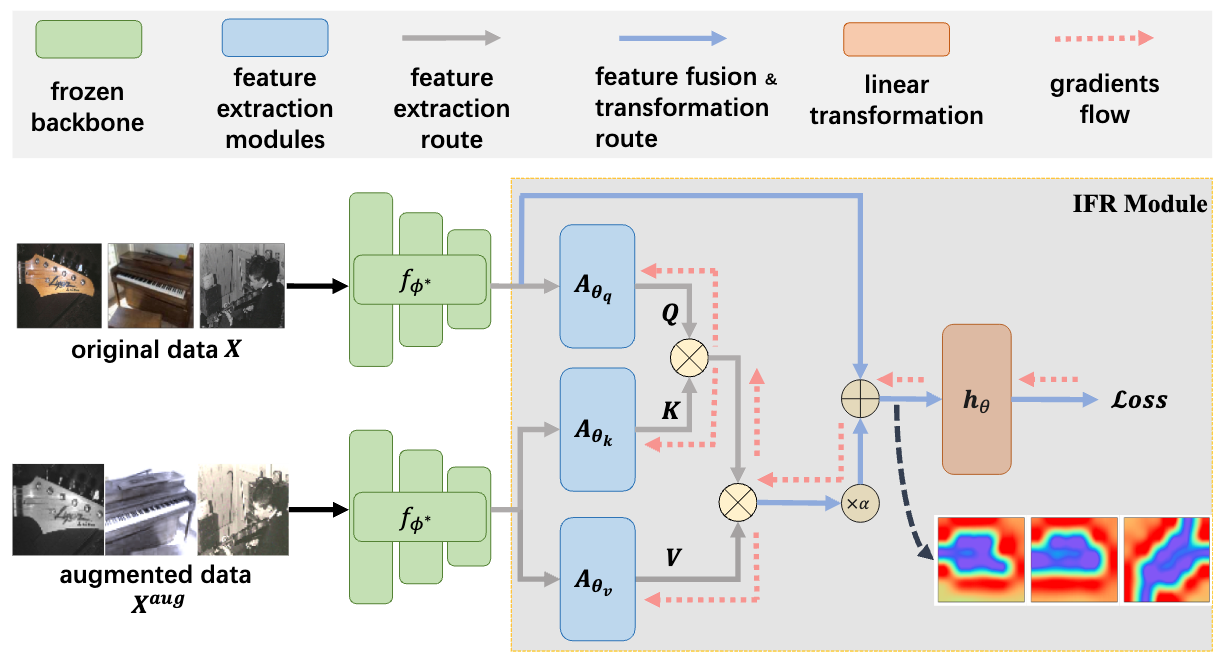

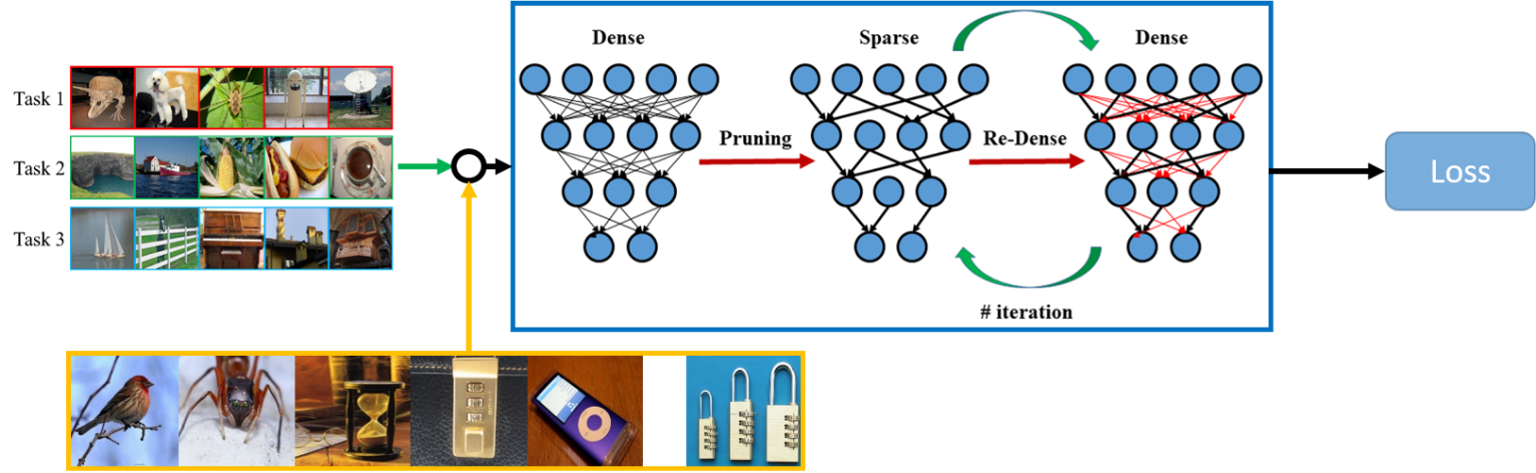

- 2025.11: Our paper “Cross-domain Few-shot Classification via Invariant-content Feature Reconstruction” was accepted by IJCV.

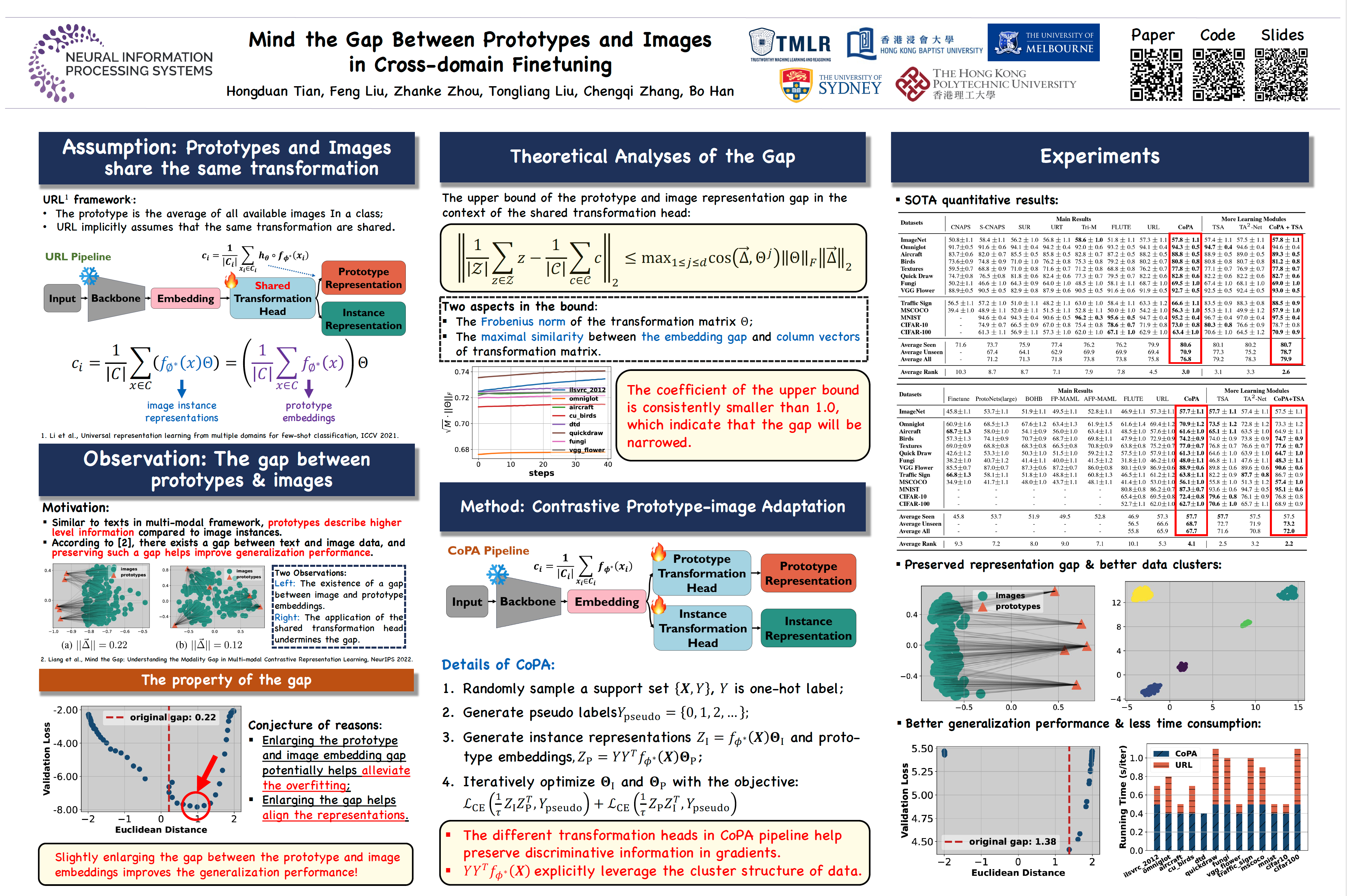

- 2024.09: Our paper “Mind the gap between prototypes and images in cross-domain finetuning” was accepted by NeurIPS 2024.

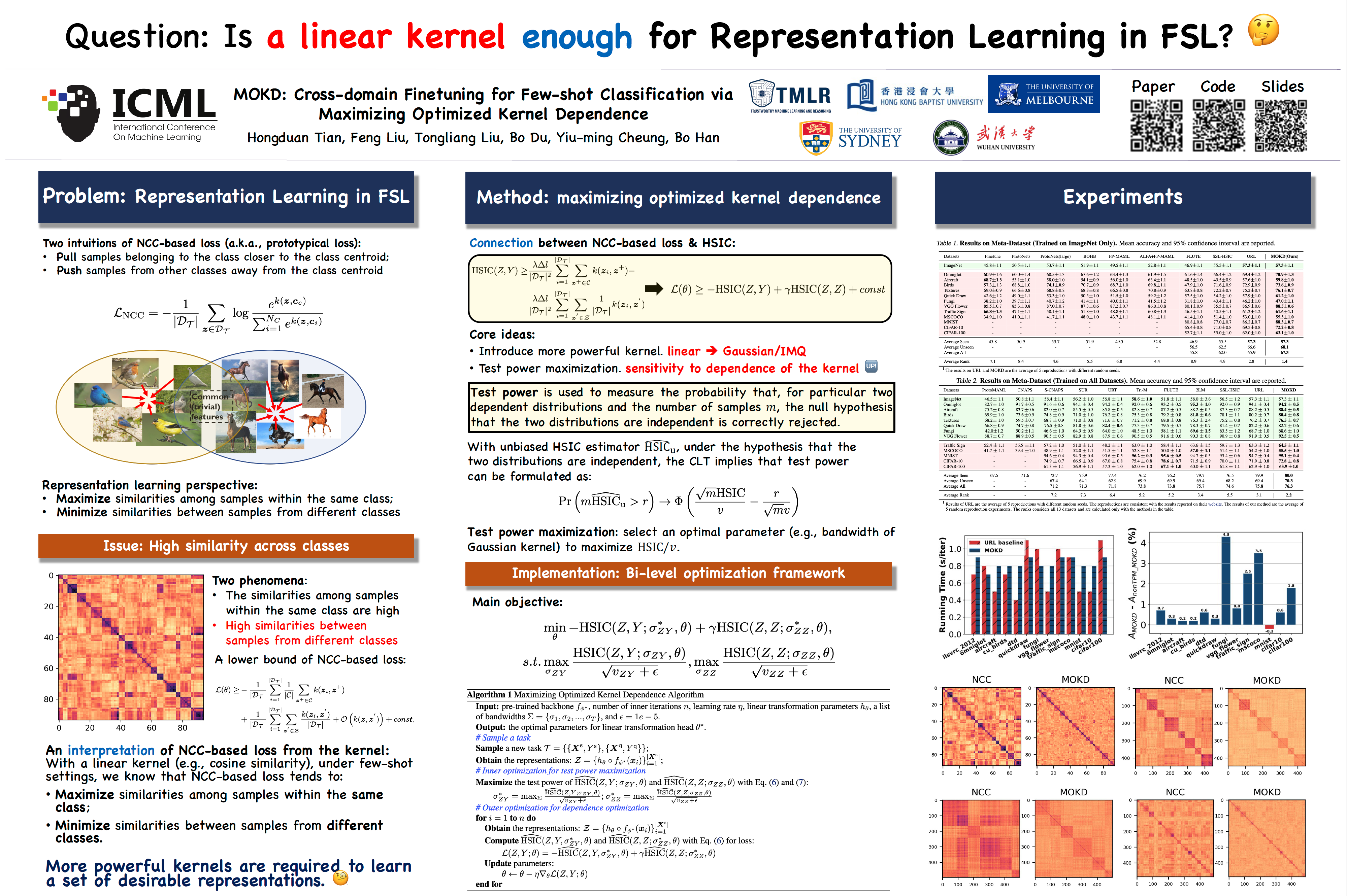

- 2024.05: Our paper “MOKD: Cross-domain finetuning for few-shot classification via maximizing optimized kernel dependence” was accepted by ICML 2024.

📖 Education

- 2023.09 - present, Hong Kong Baptist University (HKBU), Ph.D. in Computer Science.

- 2018.09 - 2021.06, Nanjing University of Information Science and Technology (NUIST), M.E. in Control Engineering.

- 2014.09 - 2018.06, Nanjing University of Information Science and Technology (NUIST), B.E. in Automation.

📝 Publications on Few-shot / Meta Learning

✉️ Corresponding author.

🎖 Awards

- 2024.11, Research Performance Award, HKBU CS Department.

- 2024.10, NeurIPS Scholar Award.

🎤 Invited Talks

- 2024.11, Mind the Gap Between Prototypes and Images in Cross-domain Finetuning @AI TIME, Online. [Video]

- 2024.06, MOKD: Cross-domain finetuning for few-shot classification via maximizing optimized kernel dependence @AI TIME, Online. [Video]

💻 GitHub Repositories

💻 Services

- Conference Reviewer for ICML, NeurIPS, ICLR, AISTATS.

- Journal Reviewer for TPAMI, TNNLS, TMLR, NEUNET.

🏫 Teaching

- COMP7015 (G) Artificial Intelligence, Sem. 1, 2025 - 2026

- COMP7250 (G) Machine Learning, Sem. 2, 2024 - 2025

- COMP7180 (G) Quantitative Methods for Data Analytics and Artificial Intelligence, Sem. 1, 2024 - 2025

- COMP7940 (G) Cloud Computing, Sem. 2, 2023 - 2024

📖 Academic Experiences

- 2023.09 - present, Ph.D. student @HKBU-TMLR Group, advised by Dr. Bo Han.

- 2022.07 - 2023.05, Research intern @HKBU-TMLR Group, advised by Dr. Bo Han and Dr. Feng Liu.

🏢 Industrial Experiences

- 2022.07 - present, Research Intern @NVIDIA NVAITC, hosted by Charles Cheung.

- 2024.11 - present, Remote Research Intern @WeChat, supervised by Xiangyu Zhu and Rolan Yan.

- 2024.06 - 2024.08, Research Intern @WeChat, hosted by Xiangyu Zhu and Rolan Yan.

- 2023.07 - 2023.08, Research Intern @Alibaba.

- 2021.07 - 2022.07, Algorithm Engineer @ZTE Nanjing Research and Development Center.